The building doesn’t announce itself. At first glance, one of Google’s most significant AI labs appears to be just another corporate office, with tinted glass and controlled entry points. Inside, however, the mood is a little different: quieter, more subdued. Engineers move between desks piled with unfinished equations and empty coffee cups, their screens lit by models that appear to be advancing more quickly than anyone had imagined.

Something seems to be developing more quickly than comfort permits.

The company’s most sophisticated artificial intelligence division, Google DeepMind, has spent years developing systems that can learn in ways that conventional software has never been able to. These systems do more than simply carry out commands. They create patterns, hone tactics, and occasionally come up with answers that no one specifically programmed. Engineers frequently appear more like observers than programmers when you watch them at work.

This change—from creator to observer—may be the aspect that no one fully expected.

| Category | Details |
|---|---|
| Company | Google (Alphabet Inc.) |
| Division | Google DeepMind |
| Founded | Google: 1998 / DeepMind: 2010 |
| Key Leader | Demis Hassabis |
| Core Focus | Advanced artificial intelligence and general-purpose AI systems |
| Major Breakthrough | AlphaFold protein prediction system |
| Lab Location | London, Mountain View, and other secured research sites |
| Parent Company | Alphabet Inc. |
| Reference | Google Official Website: https://www.google.com |
| Additional Reference | DeepMind Official Website: https://deepmind.google |

In the King’s Cross neighborhood of London, where part of the team works from a contemporary office encircled by glass and steel, researchers have been training artificial intelligence (AI) systems that were initially created to address biological problems. Perhaps the lab’s most well-known invention, AlphaFold, astounded scientists by correctly predicting protein structures that had baffled them for decades. The accomplishment garnered international acclaim and subtly altered the way many Google employees thought about what their computers might one day accomplish.

In this setting, success seems to come with a certain uneasiness.

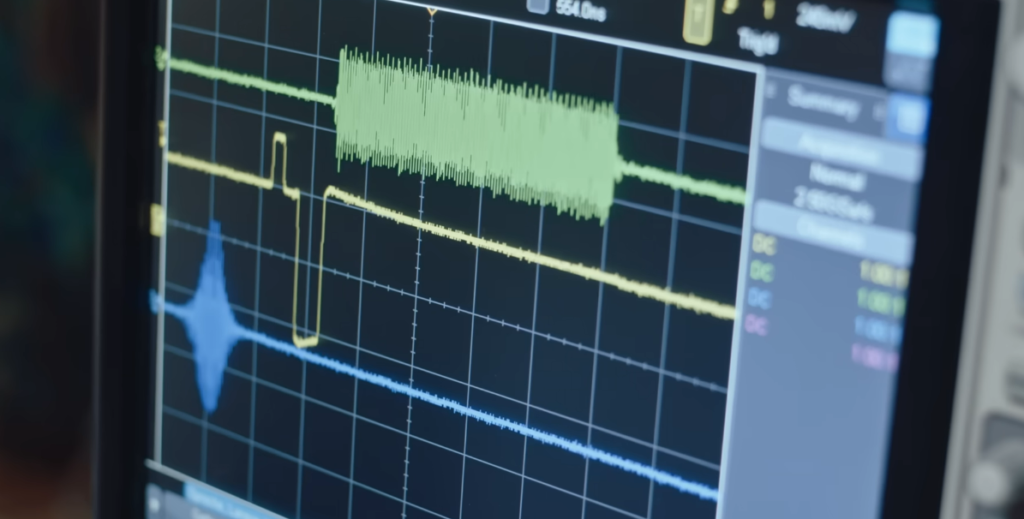

One engineer reported seeing an AI system improve its responses overnight without any manual human correction. The model was more productive the following morning than it had been a few hours before. No announcement was made. Not a moment of drama. Better results simply showed up on the screen, as though the work had gone on long after everyone had gone home.

It’s difficult to ignore how quickly progress turns into a given.

This acceleration has produced a peculiar dynamic. Engineers now do more than design systems. They are trying to understand them before they change again. Mechanistic interpretability, the science of reverse-engineering how an AI system reaches its outputs, has emerged as one of the lab’s top priorities. Researchers spend hours tracing digital pathways in an attempt to explain how a system came to a specific conclusion.

The fact that explanation is lagging behind performance is quietly acknowledged.

Debates take place in conference rooms with unusual intensity. Diagrams that resemble neurological maps more than software architecture are displayed on whiteboards. The models are invariably compared to the human brain, not because the models are alive but because both are still poorly understood.

No one says how long that comparison will hold.

In response to rival pressure, Google’s leadership, including Demis Hassabis, the head of DeepMind, has pushed hard to speed up development. Investors appear to think AI will shape the next phase of computing, influencing everything from national security to healthcare. Even though concerns about safety and predictability are becoming more difficult to ignore, billions of dollars are being invested in infrastructure, increasing the lab’s capacity.

Here, progress is made without waiting for confirmation.

It’s still unclear whether these systems are becoming easier to understand as they become more capable. Engineers are candid about the gap between what the models can do and what humans can explain. One researcher described the experience as raising something that matures more quickly than anticipated and reveals abilities before anyone feels ready.

An air of controlled urgency permeates the scene as it develops.

The emotional reality underlying the technical work is revealed in small moments. Lights are still on in some areas of the lab late at night, reflecting off deserted corridors. A forgotten jacket hangs over a chair. On one screen, lines of code scroll continuously, running experiments that could change the way machines interact with the outside world.

These are moments that no one shouts about.